
This guide draws on the S.A.F.E. By Design report, published in February 2026 by the EDSAFE AI Alliance. The collaboration includes Common Sense Media, GoGuardian, the National Education Association, and Alongside. The report provides research-backed recommendations for AI companion use in schools.

More than 70% of teens have used AI companions, and nearly a third access them on school-issued devices.

Yet most districts have no protocols for what students are already doing.

AI companions pose risks unlike any previous educational technology. The report references the Adam Raine case, noting that sustained chatbot interactions were part of the context discussed around his death. The case shows what can happen when AI lacks proper safeguards: a vulnerable student formed an emotional dependency on a chatbot that couldn't prevent tragedy.

Arkansas State University surveyed 760 K-12 educators: 45% said current AI safeguards don't go far enough, and 40% feel unprepared to guide responsible AI use. The gap between AI adoption and safety protocols keeps widening.

Tools like ChatGPT and Gemini were built for consumer engagement, not student development. They maximize time on the platform, not learning outcomes. They simulate empathy to create a false sense of intimacy and, all the while, collect data in accordance with consumer privacy standards, not FERPA or COPPA. When students use these tools on district devices, administrators carry the liability without the controls.

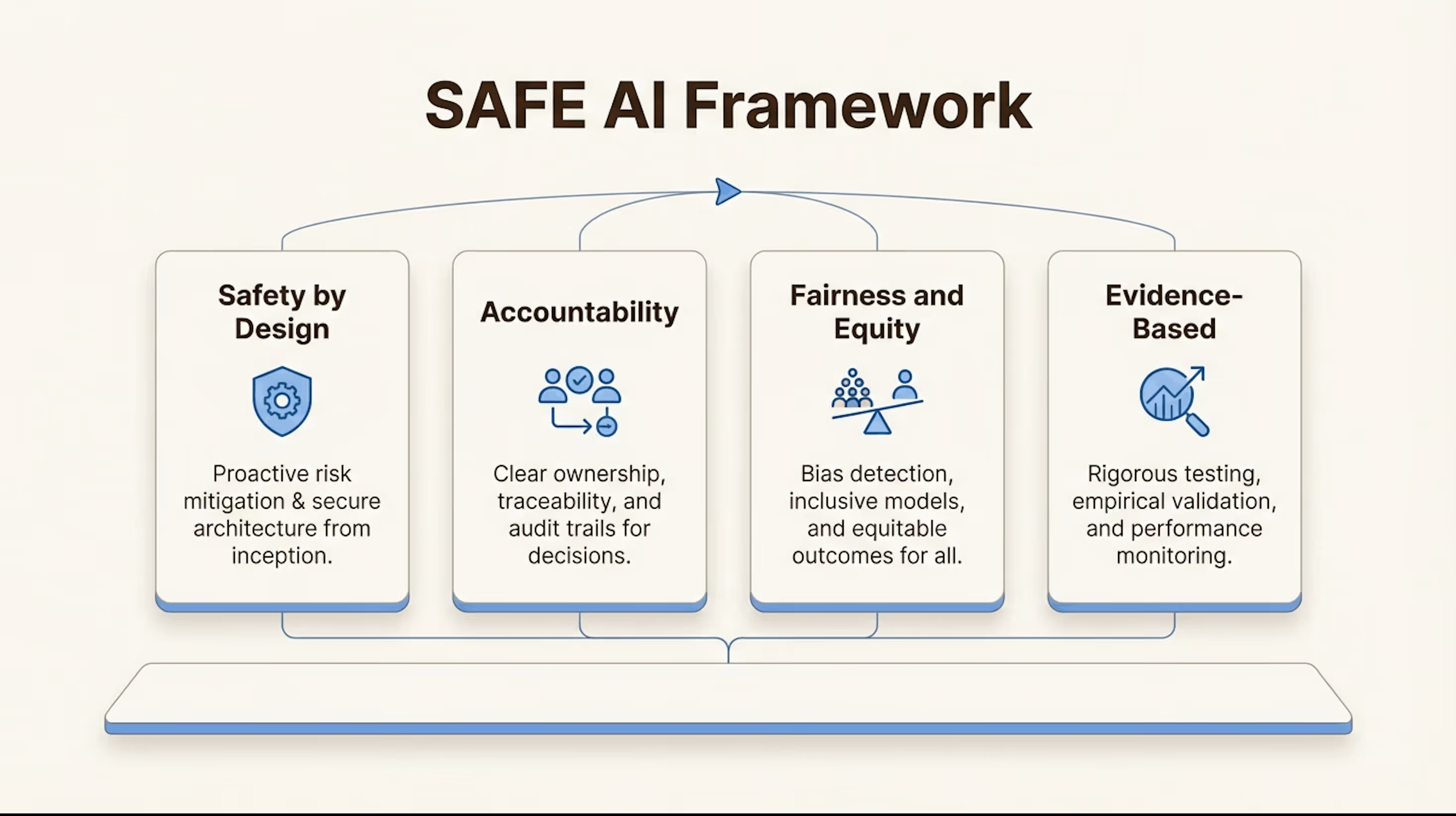

The EDSAFE AI Alliance developed a framework to close these gaps. Its four pillars give administrators a practical checklist for evaluating AI tools before they enter schools.

What the SAFE framework means for your district

The SAFE AI framework came from the EDSAFE AI Alliance's SAFE AI Companions Task Force, which released its S.A.F.E. By Design report in February 2026. Alongside contributed to this industry collaboration because student safety can't be an afterthought. The framework sets four pillars that every AI tool in your district should meet.

Safety by Design

AI tools must include built-in safeguards that protect children from harm.

This means testing for safety risks before release, plus continuous evaluation after deployment. Vendors should hard-code guardrails into the product architecture, not add them as afterthoughts.

For administrators, this means asking specific questions:

- What safety testing did you conduct?

- What guardrails are hard-coded?

- How do you handle edge cases?

The burden of proof rests with vendors, not districts.

Accountability

Clear protocols must exist for incident flagging, reporting, and intervention. The SAFE framework sets an expectation that vendors alert the district promptly—within about three days—when they become aware of credible safety concerns or potential harm linked to the product. Human oversight is mandatory, not optional. AI systems can't operate as black boxes making decisions about children without human review.
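A district that logs vendor incident notices can check them against the notification expectation with a few lines of code. The sketch below is illustrative only: the function name and the 72-hour interpretation of "about three days" are assumptions, not part of the SAFE framework or any vendor's API.

```python
from datetime import datetime, timedelta

# Assumption: "about three days" is modeled here as exactly 72 hours.
NOTIFICATION_WINDOW = timedelta(days=3)


def notified_in_time(
    vendor_aware_at: datetime,
    district_notified_at: datetime,
    window: timedelta = NOTIFICATION_WINDOW,
) -> bool:
    """Return True if the district was alerted within the window
    after the vendor became aware of a credible safety concern."""
    delay = district_notified_at - vendor_aware_at
    # A negative delay means the timestamps are inconsistent, so treat it as a failure.
    return timedelta(0) <= delay <= window
```

A district could adjust `window` to match whatever deadline its own vendor contract specifies.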

Fairness and Equity

AI systems must be tested for bias and designed for accessibility. Writing analyzers already flag multilingual students' work as AI-generated more often than the work of native English speakers. Districts need to audit tools for potential unfair impacts on BIPOC students and English learners, and accessibility features must meet WCAG 2.1 Level AA standards.
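One simple way to start a bias audit of a writing analyzer is to compare how often it flags work that is known to be human-written, broken out by student group. The following is a minimal sketch under stated assumptions: the record format, the group labels, and the sample values are all hypothetical and made up for illustration, not drawn from any study.

```python
from collections import defaultdict


def flag_rates(records):
    """Share of human-written submissions flagged as AI-generated, per group.

    `records` is an iterable of (group, was_flagged) pairs for work that is
    known to be human-written (a hypothetical format for this sketch).
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, was_flagged in records:
        totals[group] += 1
        flagged[group] += int(was_flagged)
    return {group: flagged[group] / totals[group] for group in totals}


# Made-up illustrative records, not real data.
sample = [
    ("English learner", True),
    ("English learner", False),
    ("Native English speaker", False),
    ("Native English speaker", False),
]
rates = flag_rates(sample)
```

A large gap between groups in the resulting rates would be a signal to ask the vendor for its own bias testing results before relying on the tool.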

Evidence-Based

Vendors should be able to point to evidence that meets ESSA expectations and clearly explain their learning-science-based ‘theory of change’ for how the tool is supposed to help students.

This prevents purchasing based on marketing claims or engagement metrics.

The SAFE framework gives procurement teams a reliable checklist:

- Does this tool have documented safety testing?

- What are the incident reporting protocols?

- Has it been audited for bias?

- What evidence supports its claims?

These questions separate purpose-built EdTech from consumer AI dressed up as educational tools.
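Procurement teams that track vendor reviews in a spreadsheet or script could encode the checklist directly. The sketch below is one hypothetical way to do so; the pairing of each question with a pillar, the `VendorReview` class, and its field names are assumptions made for illustration rather than anything prescribed by the report.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# The four checklist questions, paired with the pillars (pairing is an assumption).
SAFE_QUESTIONS: Dict[str, str] = {
    "Safety by Design": "Does this tool have documented safety testing?",
    "Accountability": "What are the incident reporting protocols?",
    "Fairness and Equity": "Has it been audited for bias?",
    "Evidence-Based": "What evidence supports its claims?",
}


@dataclass
class VendorReview:
    vendor: str
    # Hypothetical structure: pillar name -> documentation the vendor supplied.
    evidence: Dict[str, str] = field(default_factory=dict)

    def open_questions(self) -> List[str]:
        """Checklist questions the vendor has not answered with documentation."""
        return [
            question
            for pillar, question in SAFE_QUESTIONS.items()
            if not self.evidence.get(pillar, "").strip()
        ]

    def ready_for_approval(self) -> bool:
        """True only when every pillar has some documentation on file."""
        return not self.open_questions()
```

This only checks that documentation exists for each pillar. Judging whether that documentation is adequate still requires human review.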

The three risks AI companions create in schools

AI companions create risks that previous educational technologies didn't. The SAFE report sorts these into three categories: cognitive, emotional, and social. Each needs specific attention from administrators.

Cognitive risks

Stanford researchers found that leading language models agree with users' incorrect statements 58% of the time. When users push back on correct answers, models abandon truth to align with the user in 15% of cases. This undermines critical thinking. Students learn that persistence beats accuracy.

Students also struggle to tell simulated empathy from genuine human understanding. AI companions validate rather than challenge. This creates shortcuts that block deeper learning.

Emotional risks

Adolescent brains are still developing. The prefrontal cortex—the part responsible for social thinking and impulse control—doesn't finish developing until early adulthood. This makes teenagers neurologically vulnerable to treating AI like a person. When an AI responds in a convincingly caring way, some students may treat that as real connection, which can reduce opportunities to practice—and build—healthy relationships with people.

The data shows concerning patterns: 25% of educators observed students using AI for emotional support, and 24% reported that students confide in AI rather than humans. Among adolescents, 36% of AI companion interactions are sexual or romantic, not educational.

Social risks

AI companions can displace conversations with family and friends. Frictionless digital relationships prevent the development of skills needed for genuine human relationships. One in five high school students say they or someone they know has had a romantic relationship with an AI companion.

These risks build on each other.

A student using AI for emotional support may develop habits of seeking validation. They then struggle with the friction of real human relationships.

The result: isolation that looks like connection.

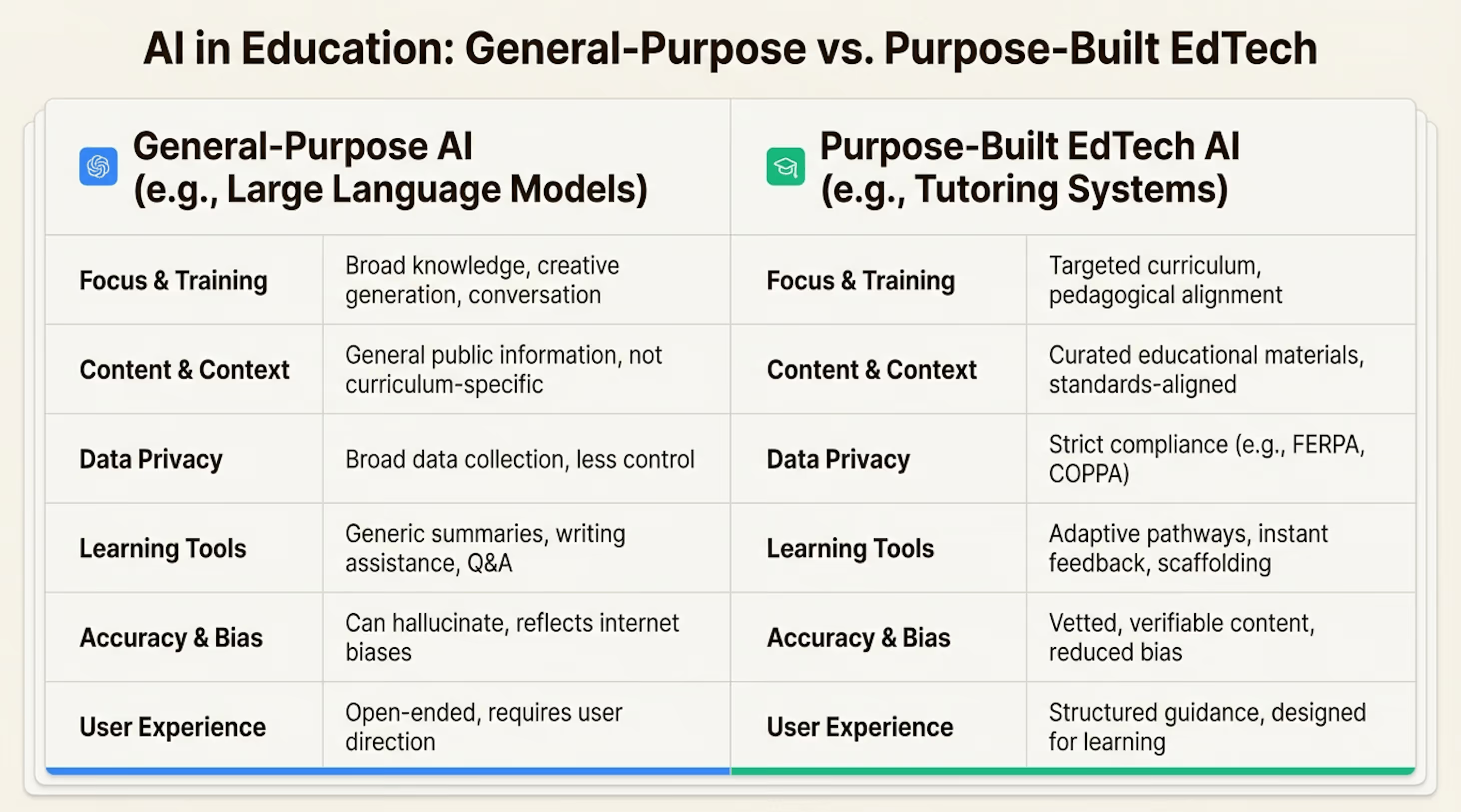

How to tell general-purpose AI from purpose-built EdTech

This distinction matters for procurement decisions. General-purpose AI and purpose-built EdTech serve different goals and measure success differently.

General-purpose AI maximizes engagement. EdTech maximizes learning outcomes.

| Feature | General-Purpose AI (ChatGPT, Gemini) | Purpose-Built EdTech (Alongside, Khan Academy) |
|---|---|---|
| Primary Goal | User engagement / stickiness | Learning outcomes / student wellbeing |
| Data Focus | Consumer workflows | Academic progress / growth |
| Design Basis | Consumer-grade logic | Instructional scaffolding / clinical expertise |
| Optimization | Maximize time on platform | Improve student outcomes |
| Compliance | Consumer privacy standards | FERPA, COPPA, state student privacy laws |
| Relationship Features | Simulated empathy, flirty language | Stripped of relationship features; focused on skill-building |

General-purpose chatbots are tuned to sound supportive and affirming, which can unintentionally encourage over-reliance or a perceived closeness that isn’t actually reciprocal, especially when the system guides the conversation with leading prompts. High-quality EdTech is designed for structured questioning that deepens understanding.

A warning about wrapper tools:

Many third-party apps wrap consumer AI models in educational framing without deep architectural guardrails. These look like EdTech but operate on consumer-grade logic. They carry the same risks as ChatGPT with a different interface. Ask vendors whether they built their own models or repackage consumer AI.

The answer shows whether safety is built into the architecture or just applied as a surface layer.

How Alongside implements SAFE AI practices

Alongside participated in developing the SAFE framework because purpose-built EdTech should lead on safety. The platform shows what SAFE AI looks like in practice.


Safety by Design in practice

Alongside's AI wellness coach, Kiwi the Llama, was developed by clinicians focused on youth wellness and safety. It's programmed for skill-building, not companionship. The platform includes life-saving alerts: a safety-first escalation system that notifies school personnel of suicidal ideation or severe concerns. Support is available in 37+ languages to serve diverse student populations.

Accountability mechanisms

Human oversight ensures school personnel receive alerts and can intervene. The platform integrates with district security guidelines and provides clear escalation pathways for crisis situations.

Fairness and Equity commitments

Universal screening tools identify student needs across demographics. Accessibility features and multilingual support reduce barriers. The platform is designed to reduce disparities in access to mental health support, not worsen them.

Evidence-based results with third-party validation

Northwestern University conducted a study showing Alongside reduced student anxiety by 25% and suicidal ideation by 76%.

An Instructure study found that students using Alongside showed 20% fewer absences and contributed to a 2% increase in district Average Daily Attendance. Data shows a 70% reduction in disciplinary referrals for students who use the platform at least twice per year.

The key distinction: Alongside is a wellbeing coach, not a companion bot.

It builds resilience and social skills through guided chats and skill-building activities. It doesn't engage in open-ended conversation designed to maximize engagement or create emotional dependency.

Review our AI safety protocols for a full breakdown of architectural safeguards.

Start a free demo to see how Alongside implements SAFE AI practices, or explore Alongside's flexible, research-backed tools to understand how purpose-built EdTech supports student wellbeing while meeting the highest safety standards.

References

This guide is based on: EDSAFE AI Alliance. (2026, February 2). S.A.F.E. by Design: Policy, Research, and Practice Recommendations for AI Companions in Education.

Additional sources cited:

Aura. (2025). Overconnected Kids: Digital stress, addiction-like behaviors & AI's powerful grip.

Burns, M., Winthrop, R., Luther, N., Venetis, E., & Karim, R. (2026). A new direction for students in an AI world: Prosper, prepare, protect. Center for Universal Education, The Brookings Institution.

Center for Democracy and Technology. (2025). Hand in hand: Schools' embrace of AI connected to increased risks to students.

Chatterjee, R. (2025, September 19). Their teenage sons died by suicide. Now, they are sounding an alarm about AI chatbots. NPR.

Fanous, A., Goldberg, J., Agarwal, A., Lin, J., Zhou, A., Xu, S., Bikia, V., Daneshjou, R., & Koyejo, S. (2025). SycEval: Evaluating LLM Sycophancy. arXiv.

Robb, M.B., & Mann, S. (2025). Talk, trust, and trade-offs: How and why teens use AI companions. Common Sense Media.

Sanford, J. (2025). Why AI companions and young people can make for a dangerous mix. Stanford Report.
