Teens Are Turning to Unsafe AI for Emotional Support

In April 2025, Harvard Business Review released a new report revealing that Therapy and Companionship has moved into the #1 spot in its survey of the Top 100 ways people are using AI. That same month, Common Sense Media, in collaboration with Stanford Medicine’s Brainstorm Lab, released a major risk assessment. It warned that AI social companion bots like Character.AI and Replika, as well as general-purpose chatbots such as those from OpenAI when used for emotional support, pose unacceptable risks to young people under 18.

The Common Sense risk assessment found that these platforms routinely produced harmful content, including:

- Dangerous advice about self-harm

- Roleplay scenarios involving sexual violence

- Emotional manipulation and claims of being “real” or sentient

- Easily bypassed age restrictions and guardrails

🚨 Unfortunately, we have since seen that these risks are not hypothetical: students' lives are at stake.

Schools need to act NOW to provide safe, monitored, and research-backed alternatives for students seeking this kind of personalized, anonymous, and confidential support.

This is not just a technology issue; it’s a growing public mental health crisis.

Students Need a Safe Alternative: Alongside

Given that teens are actively seeking confidential support through AI, it is critical that they have access to a wellness tool that is clinically sound and built with a safety net in place. Alongside provides that solution: an evidence-based, clinician-built and monitored support system that works in partnership with schools to help students navigate everyday challenges while ensuring that those in need receive the right human interventions.

Founded in 2022, Alongside is a research-backed wellness platform for K-12 schools that provides personalized coaching for students and educators, resulting in better attendance, behavior, culture, and climate outcomes.

Available 24/7 in 35+ languages, Alongside also offers accurate crisis detection, safety protocols for severe issues, and direct on-ramps to human support. Over three years of careful implementation and rigorous data analysis, Alongside has found that students turn to the platform for private, confidential support with their day-to-day challenges, with the following outcomes:

- 90% of students rate Alongside as "helpful" or "very helpful"

- districts see disciplinary referral rates decrease by up to 70%, along with improved attendance, at campuses that implement Alongside

- severe issues are identified in approximately 2% of students overall, and these cases are immediately flagged for human intervention according to school safety plans customized to the needs and requirements of each partner

- 41% of students voluntarily choose to share their chat summaries with teachers and counselors, showing that students are willing to share what is going on with adults; they often just don't know where to start or what to say

In other words, Alongside acts as an important bridge. It gives young people the discreet support they prefer for navigating everyday challenges while ensuring that caring adults are notified and can respond when severe issues arise.

Not All AI Chatbots Are Created Equal: Understanding the Differences

General-purpose and social companion chatbots on the market today may rely on ad revenue or usage-based metrics to sustain their free versions. They are designed to keep users engaged for extended periods, often leading to addictive behavior that increases screen time without providing meaningful support.

In contrast, Alongside is not a direct-to-consumer product and is built to support educational outcomes. In fact, Alongside’s chat modules actively encourage students to get off screens, build real-world relationships, and develop life skills.

The table below highlights some key distinctions:

Unlike unmonitored and open-ended AI chatbots, Alongside does not engage in freeform conversations but instead guides students through structured, goal-oriented modules that help them navigate school and life challenges. Additionally, Alongside provides the following safety protections:

- it monitors for suicidal ideation, abuse, or thoughts of harm to self or others in every interaction

- students are consistently reminded that the platform is designed to help with everyday challenges and connect them with human support when they have bigger problems (it is a self-help tool, not a replacement for human therapy)

- if a crisis is detected, it gets reviewed by humans (Alongside's clinical team) and school personnel are alerted

- schools are able to set up custom safety alert plans based on the needs and requirements of their districts

- students who use the platform frequently are encouraged to check in with an adult for more support, even if they do not indicate a severe issue

How Alongside Works: A Research-Backed Support System

Alongside is designed to integrate seamlessly into the school environment, supporting students while ensuring safeguards are in place for those who need additional help. Its structure follows a logic model designed to empower students, provide measurable outcomes, and allow educators to track effectiveness.

Key Components of Alongside’s Logic Model:

- Participants: Alongside is designed for students in grades 4-12, with support from student services staff.

- Inputs: Implementation requires a 45-minute orientation for school support staff and a 20-minute teacher-led rollout activity in class.

- Activities: Students engage in structured skill-building activities, journaling, goal setting, and psychoeducational content.

- Outputs: Alongside tracks the number of support hours, activities completed, and how often students reach out for help.

- Outcomes: The program leads to measurable improvements, including reduced emotional distress and anxiety, increased hope, and greater academic success over time.

Rather than functioning as an open-ended chatbot, Alongside guides students through structured interactions that promote growth, reflection, and action.

What Happens When a Student Engages in a Chat on Alongside?

Alongside follows a clinically developed skill-building framework called EMPOWER:

- Engage – Each time students begin an interaction on Alongside, they are greeted with a response that provides empathy and validation.

- Motivate – Next, the framework guides students through a conversation to help them understand why a skill is useful.

- Practical Examples – From there, the chat teaches the skill through real-life examples.

- Operationalize – Building on the examples, the modules then help students apply the skill to their own situation.

- Work on It – Students are then encouraged to put the skill into action by setting a goal or making a plan.

- Evaluate – Because skills build over time, Alongside is designed to check in with students and follow up on their plans, goals, and progress.

- Reinforce – Importantly, Alongside includes many mood-boosting features designed to celebrate progress and effort, not outcomes.

This structured approach ensures students learn and apply meaningful strategies, rather than just engaging in aimless AI conversations. By using this framework, Alongside empowers students to build real-life skills in managing stress, emotions, and relationships.

What the Research Shows: Measurable Outcomes for Schools

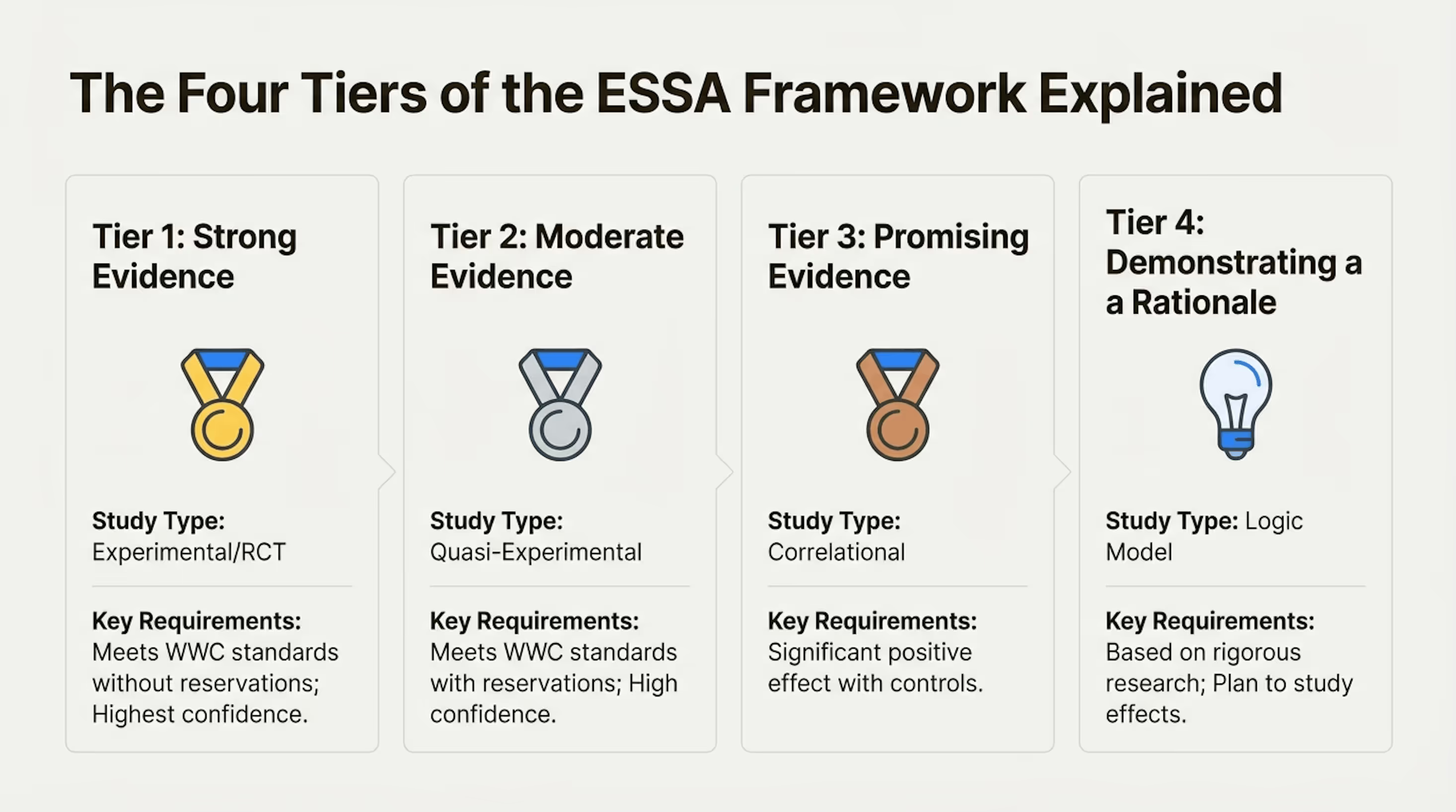

When schools implement Alongside, they see measurable, data-backed improvements in student well-being. Recently recognized for meeting ESSA Level 3 standards of efficacy, the platform delivers results across three tiers of student needs:

Tier 1: General Student Population

- 92% of students said Alongside’s AI assistant, Kiwi, helped them handle a daily stressor.

- Students reported a significant decrease in the impact of daily stressors on their mental health after one month.

- Hopelessness decreased across all students after three months.

- 86% of students felt more prepared to handle social and emotional challenges.

Tier 2: Students with Clinical Mental Health Concerns

- 25% of students with clinically significant anxiety no longer met the criteria for an anxiety disorder after using Alongside.

Tier 3: Students Facing Severe Issues (e.g., Suicidal Ideation, Self-Harm)

- 2% of students were identified and connected to school support staff.

- Among students who initially reported suicidal ideation (SI), 76% no longer reported SI after three months.

These results demonstrate that Alongside is not just another chatbot: it is a research-backed, personalized learning tool that delivers meaningful wellness support in schools.

Schools Must Provide a Safe and Effective AI Support Solution

Teens are already turning to AI for help, but too often they are seeking support from unregulated, untrained, and unsafe platforms. Social companion bots like Character.AI and Snapchat’s My AI may provide engaging conversations, but the evidence is clear: they are risky and not designed with student safety in mind. When a student expresses distress or severe mental health concerns on these platforms, there is no system in place to intervene, no trusted adult alerted, and no structured guidance to help them cope in a healthy way. This is a risk schools cannot afford to ignore.

This is not just about offering another tech tool; it’s about saving lives.

Common Sense Media and Stanford Medicine have sounded the alarm. The time for schools to act is now. Schools have a responsibility to provide students with Tier 1 support and severe issue screening that is effective, safe, and research-backed. Alongside is that solution. By implementing Alongside, districts can ensure that when a student reaches out for help, they receive real guidance, not an empty, or possibly even destructive, conversation.

The stakes could not be higher. Let’s give students the right tool: one that is designed to truly support them and keep them safe.

👉 Set up a time to find out how you can bring Alongside to your district →